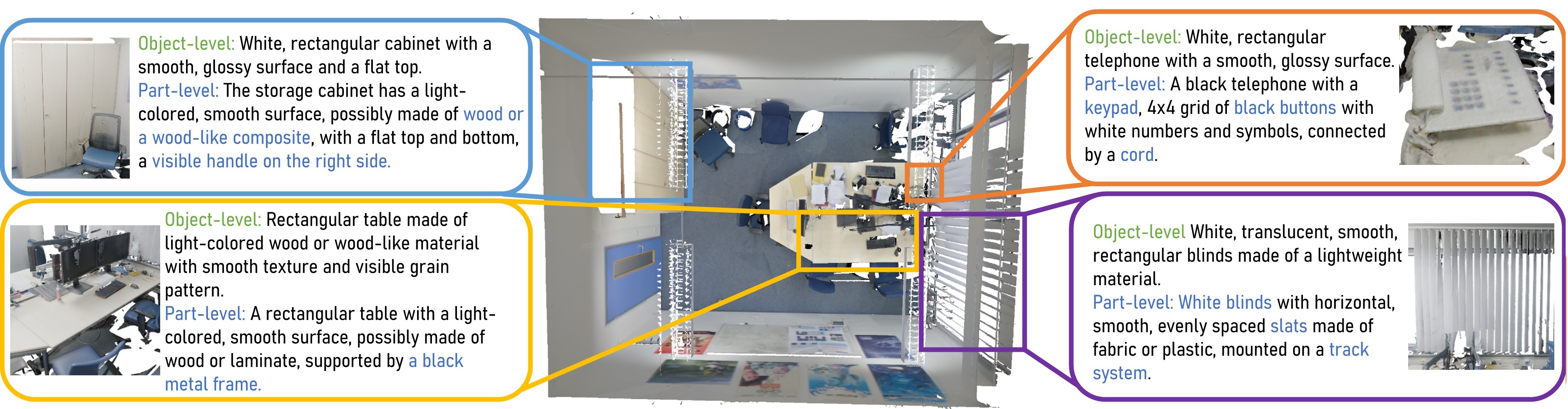

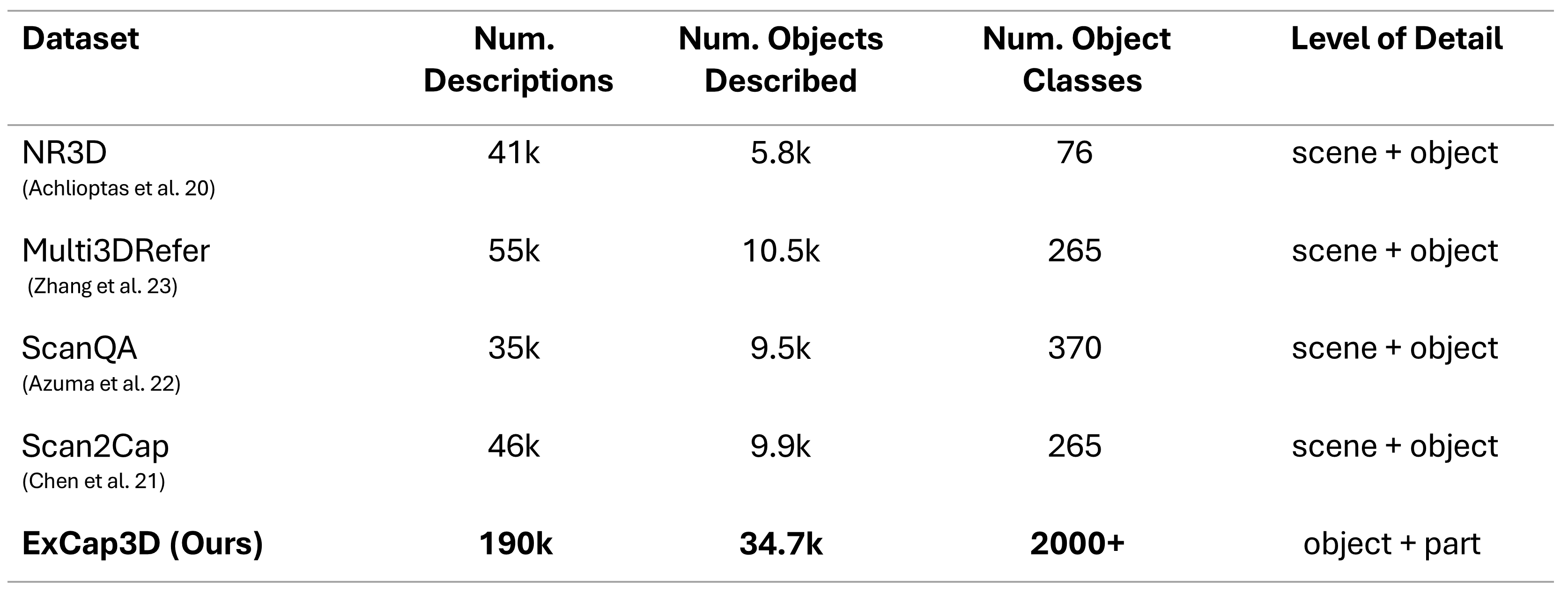

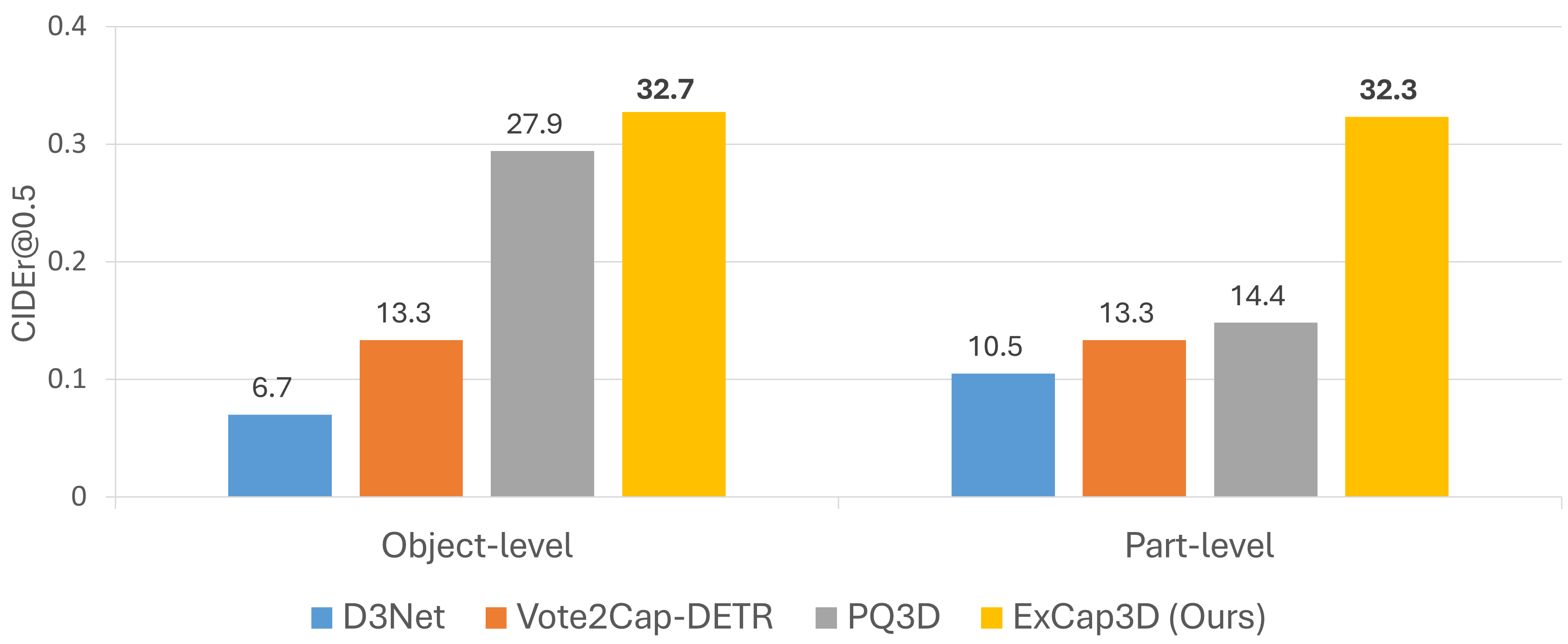

We present ExCap3D, an expressive 3D captioning model that takes a 3D scan as input and, for each detected object in the scan, generates a fine-grained collective description of the object's parts, along with an object-level description conditioned on the part-level description. We design ExCap3D to encourage semantic consistency between the generated text descriptions, as well as textual similarity in the latent space, to further increase the quality of the generated captions. To enable this task, we generate the ExCap3D Dataset by leveraging a visual-language model (VLM) for multi-view captioning. The ExCap3D Dataset provides captions at varying levels of detail for the ScanNet++ dataset, comprising 190k text descriptions of 34k 3D objects in 947 indoor scenes. The object-level and part-level captions generated by ExCap3D are of higher quality than those produced by state-of-the-art methods, with CIDEr score improvements of 17% and 124% for object- and part-level details, respectively.

@misc{yeshwanth2025excap3d,

title={ExCap3D: Expressive 3D Scene Understanding via Object Captioning with Varying Detail},

author={Chandan Yeshwanth and David Rozenberszki and Angela Dai},

year={2025},

eprint={2503.17044},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2503.17044},

}