I am a PhD candidate at the 3D Understanding Lab at TUM advised by Prof. Angela Dai. I’m interested in 3D scene understanding and generation through multimodal data such as RGB(D), laser scans, and natural language.

I’m a co-author and maintainer of the ScanNet++ indoor scene dataset and benchmarks, and am interested in new applications for AR/VR using the dataset.

I completed my MSc in Robotics, Cognition and Intelligence at TUM with a thesis on 3D-2D Contrastive Learning. Before that, I worked at Siemens R&D India and studied at IIIT Bangalore.

When I’m not working, I like to cook, hike and play with the neighborhood cats.

Master's thesis / GR / IDP Supervision

Please send me an email if you’re interested in a thesis/GR/IDP. Topics are usually related to semantic/instance understanding on ScanNet++. For examples, see our group’s publications.

Selected Publications

ExCap3D: Expressive 3D Scene Understanding via Object Captioning with Varying Detail

ICCV 2025

Fine-grained object and part-level dense captioning of 3D scenes with CIDEr score improvements of 17% and 124% over baselines, using semantic and textual consistency losses. Large-scale dataset with 190k object and part-level captions of 34k 3D objects in the ScanNet++ dataset.

ScanNet++: A High-Fidelity Dataset of 3D Indoor Scenes

ICCV 2023 Oral

1000+ high-resolution indoor scenes with 3D laser scans, panoramic images, 33MP DSLR sequences and iPhone RGBD streams, all registered together, with fine-grained semantic and instance annotations. Includes public semantic and novel view synthesis benchmarks.

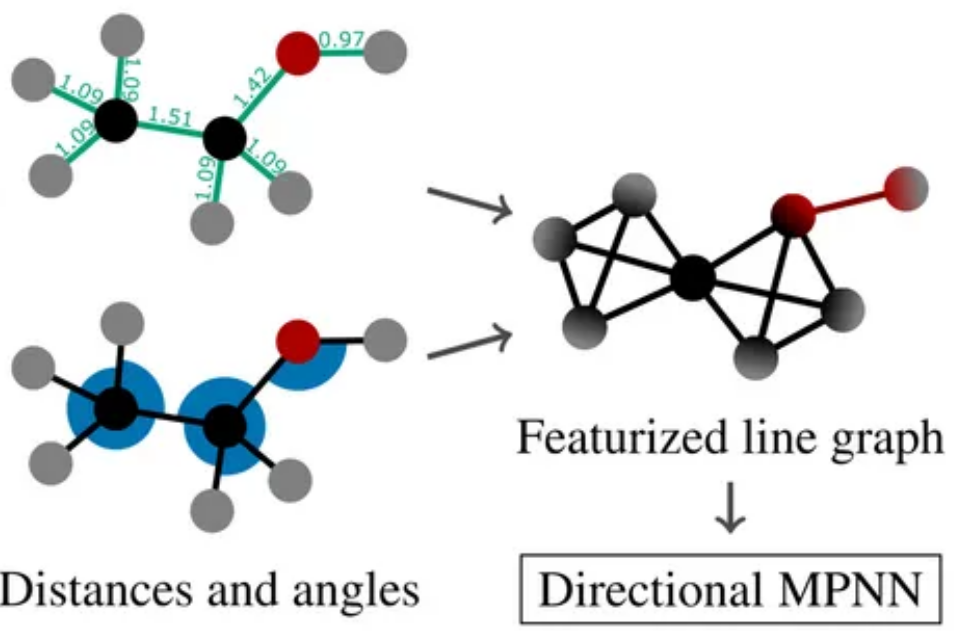

Directional Message Passing on Molecular Graphs via Synthetic Coordinates

NeurIPS 2021

Molecular property prediction using graph neural networks with synthetic coordinates (distances and angles) as edge features. State of the art on ZINC and coordinate-free QM9 by incorporating synthetic coordinates in the SMP and DimeNet++ models.

Sceneformer: Indoor Scene Generation with Transformers

3DV 2021

Indoor 3D scene generation as a sequence of object tokens using Transformers. Generates object location, semantic class, size, rotation and relevant properties by training on the SUNCG dataset. State-of-the-art scene generation validated by a user study and inference speed.